The Agentic Margin: What It Costs vs. What You Earn from AI Assistants

A straightforward way to measure whether your AI helpers are actually worth what you're spending on them.

When companies build (and deploy) AI agents, they often focus on capabilities first and economics second.

This is a bit like hiring employees based solely on their skills without considering their salary requirements. It’s possible, but it’s a recipe for trouble down the line.

AI agents consume resources every time they perform tasks. They use computing power and make API calls to LLMs, text-to-speech engines, avatar generators, and much more.

All of these elements cost real money, often far more per unit of usage than a typical SaaS product. These costs scale not just with usage but also with the type of input, in ways that aren't always predictable or linear.

The agentic margin (AM) and agentic margin ratio (AMR) are practical measures that tell you whether your investment in AI is actually creating value or quietly draining your resources.
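In formula form, for a given unit of work (one interaction or one resolved ticket), let R be the revenue it brings in and C the cost of producing it. Computing AMR as AM divided by revenue is our reading of the article's own worked example ($4.78 / $5 = 95.6%), not a formal definition from elsewhere:

```latex
\mathrm{AM} = R - C
\qquad
\mathrm{AMR} = \frac{\mathrm{AM}}{R} = \frac{R - C}{R}
```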

In our recent conversations, we have seen too many companies rush to implement AI agents, only to pull back months later when they realize the agents cost more to operate than the value they create.

Without tracking agentic margin from day one, these issues often remain hidden.

What makes agentic margin different from other metrics (including SaaS metrics)?

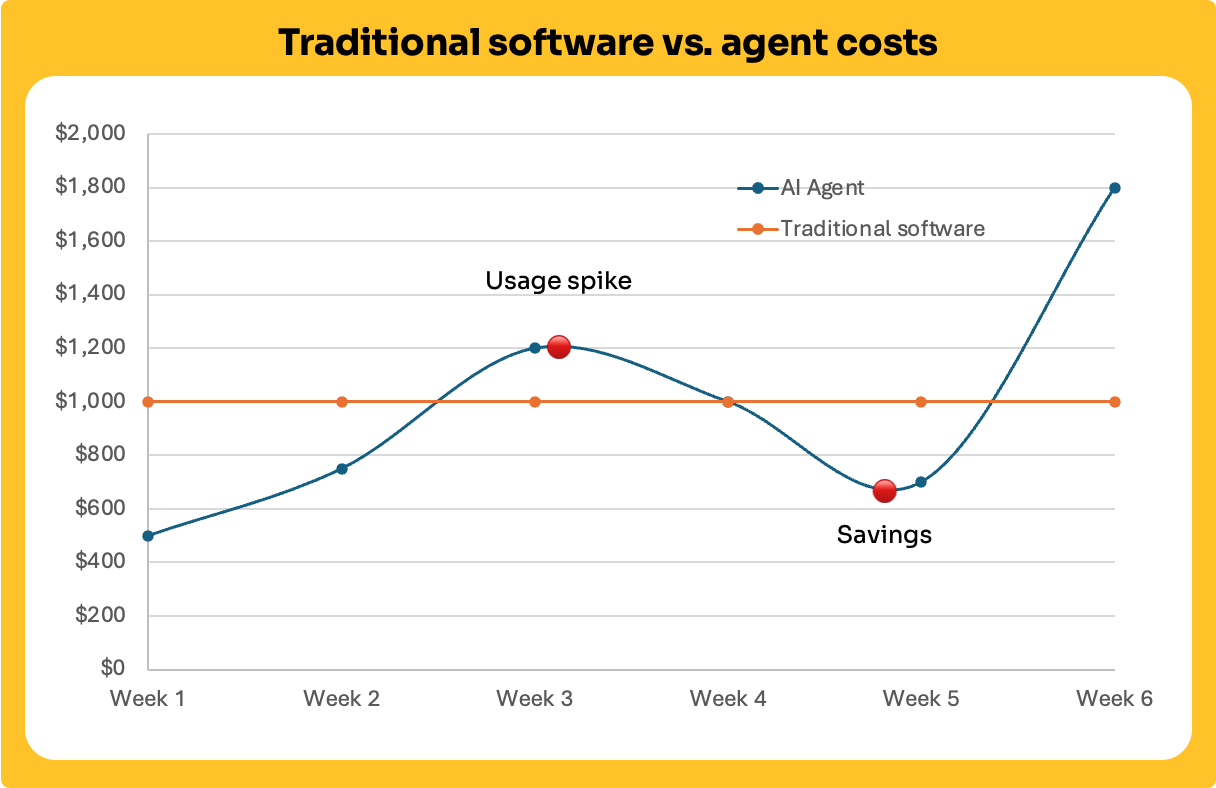

Unlike traditional software that has predictable licensing costs, AI agents have a unique economic profile:

The services they rely on typically charge by usage (tokens, API calls, compute time)

They consume multiple services simultaneously

Their costs fluctuate based on task complexity

Their usage patterns can change as user behavior evolves
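To see why usage-based pricing makes costs nonlinear, here is a minimal Python sketch of token-billed LLM spend. The per-million-token prices are placeholders, not any provider's real rates, and the six-step agent loop that re-sends a growing context is a hypothetical scenario:

```python
# Placeholder prices in USD per 1M tokens; substitute your provider's real rates.
INPUT_PRICE_PER_M = 3.00
OUTPUT_PRICE_PER_M = 15.00


def llm_call_cost(input_tokens: int, output_tokens: int) -> float:
    """USD cost of one LLM call under per-token billing."""
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


def interaction_cost(calls: list[tuple[int, int]]) -> float:
    """Total cost of all (input_tokens, output_tokens) calls made for one interaction."""
    return sum(llm_call_cost(i, o) for i, o in calls)


# Simple question: one call with a short prompt and a short answer.
simple = interaction_cost([(800, 200)])
# Hypothetical complex task: six agent steps, each re-sending a context that grows by 2,000 tokens.
complex_ = interaction_cost([(800 + 2_000 * step, 400) for step in range(6)])

print(f"simple: ${simple:.4f}  complex: ${complex_:.4f}  ratio: {complex_ / simple:.0f}x")
```

Both cases are a single customer interaction, yet the second costs about 26 times as much ($0.1404 vs. $0.0054), because every step re-sends an ever-larger context.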

The Components of Agent Costs 💸

To calculate your agentic margin accurately, you need to understand all the ingredients that contribute to your agent's operating costs:

Language models: The "thinking" capabilities of your agent

Voice processing: Converting speech to text and text to speech

Visual capabilities: Understanding or generating images

Infrastructure: Servers and data storage

Development: Building and improving your agents

Human oversight: People who monitor and train agents
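One way to turn these ingredients into a single per-interaction number is to add up the variable costs and spread the fixed ones (development, human oversight) across monthly volume. A minimal sketch, where the `CostModel` class and every dollar figure are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    # Variable costs in USD per interaction (all figures hypothetical)
    llm: float
    voice: float
    vision: float
    infrastructure: float
    # Fixed costs in USD per month (hypothetical)
    development_monthly: float
    oversight_monthly: float

    def cost_per_interaction(self, monthly_interactions: int) -> float:
        """Variable cost plus fixed monthly costs spread evenly over the month's volume."""
        if monthly_interactions <= 0:
            raise ValueError("monthly_interactions must be positive")
        variable = self.llm + self.voice + self.vision + self.infrastructure
        fixed = (self.development_monthly + self.oversight_monthly) / monthly_interactions
        return variable + fixed


# A text-only agent: no voice or vision spend.
model = CostModel(llm=0.05, voice=0.0, vision=0.0, infrastructure=0.01,
                  development_monthly=10_000, oversight_monthly=5_000)
for volume in (10_000, 100_000):
    print(volume, round(model.cost_per_interaction(volume), 3))
```

The same agent costs $1.56 per interaction at 10,000 interactions a month but $0.21 at 100,000, because the fixed costs are amortized over more volume; the variable costs never shrink this way.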

A Tale of Two Support Agents

Here is a real example (with details scrubbed) that we encountered when helping a software company evaluate their customer support agents:

Agent A used minimal resources, sticking to text-only responses and simple database lookups. It cost about $0.22 per customer interaction, resolved 65% of tickets without human intervention, and brought in $5 of revenue per resolved ticket.

Agentic Margin (AM): $5 - $0.22 = $4.78. That's an AMR of 95.6% ($4.78 / $5). VERY healthy!

Agent B used advanced features: voice capabilities, screenshot analysis, and on-demand tutorial video generation. It cost $3.20 per interaction but resolved 85% of tickets, and brought in $5.50 of revenue per resolved ticket.

Agentic Margin (AM): $5.50 - $3.20 = $2.30, an AMR of about 41.8% ($2.30 / $5.50), much lower than Agent A's.

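Even after factoring in the resolution rate, and assuming only resolved tickets get you paid, Agent A still earns more per interaction. Agent A brings in 0.65 × $5 = $3.25 on average, for an AM of $3.03 (AMR ≈ 93%), while Agent B brings in 0.85 × $5.50 ≈ $4.68, for an AM of about $1.48 (AMR ≈ 32%).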
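Both views of the comparison can be checked with a short Python sketch. The figures come from the Agent A and Agent B example; the `Agent` class and its method names are illustrative, and the per-interaction view assumes unresolved tickets earn nothing:

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    cost_per_interaction: float   # USD spent per customer interaction
    resolution_rate: float        # share of tickets resolved without a human
    revenue_per_resolved: float   # USD earned per resolved ticket

    def margin_per_resolved_ticket(self) -> tuple[float, float]:
        """AM and AMR: revenue per resolved ticket vs. cost per interaction."""
        am = self.revenue_per_resolved - self.cost_per_interaction
        return am, am / self.revenue_per_resolved

    def margin_per_interaction(self) -> tuple[float, float]:
        """AM and AMR per interaction, assuming only resolved tickets are paid."""
        expected_revenue = self.resolution_rate * self.revenue_per_resolved
        am = expected_revenue - self.cost_per_interaction
        return am, am / expected_revenue


agents = [Agent("A", 0.22, 0.65, 5.00), Agent("B", 3.20, 0.85, 5.50)]
for agent in agents:
    am_t, amr_t = agent.margin_per_resolved_ticket()
    am_i, amr_i = agent.margin_per_interaction()
    print(f"Agent {agent.name}: per resolved ticket AM=${am_t:.2f}, AMR={amr_t:.1%}; "
          f"per interaction AM=${am_i:.2f}, AMR={amr_i:.1%}")
```

Agent A comes out ahead under either view; the resolution rate narrows Agent B's revenue gap but not enough to cover its higher cost.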

When building these agents, the company focused only on resolution rates and almost standardized on Agent B before realizing that Agent A's superior agentic margin made it the better choice for deployment at scale.

It’s also true that as the cost of LLMs and tools goes down, Agent B’s margins could improve.

That’s why you have to continuously check the health of your agents across different variants and models.
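To put a number on how far Agent B's costs would have to fall, here is a break-even sketch. It assumes resolution rates and revenue per resolved ticket stay fixed and that only resolved tickets are paid; `breakeven_cost` is a hypothetical helper:

```python
def breakeven_cost(target_am: float, resolution_rate: float, revenue_per_resolved: float) -> float:
    """Highest cost per interaction that still yields `target_am` per interaction,
    assuming only resolved tickets are paid."""
    return resolution_rate * revenue_per_resolved - target_am


# Agent A's margin per interaction: 65% of $5.00, minus $0.22 cost.
agent_a_am = 0.65 * 5.00 - 0.22
# Cost Agent B (85% resolution, $5.50 per resolved ticket) must reach to match it.
b_target_cost = breakeven_cost(agent_a_am, 0.85, 5.50)
reduction = 1 - b_target_cost / 3.20   # relative cut from today's $3.20

print(f"Agent B needs cost <= ${b_target_cost:.3f} per interaction ({reduction:.0%} cheaper)")
```

Under those assumptions Agent B would need to cost roughly $1.65 per interaction, about half of today's $3.20, before its margin per interaction matches Agent A's.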

Try this today: Making your agents healthier

Here are some practical ways to improve your agentic margin:

Right-size your capabilities: Match agent features to actual user needs. If you are not sure which features/abilities get used, start tracking sooner rather than later.

Continuously monitor capabilities: As models get cheaper or gain new capabilities, keep checking your agents’ metrics. You could be underpricing and overdelivering (or vice versa).

Cache common responses: Don't regenerate the same answers repeatedly; serving repeats from a cache avoids paying for the same expensive API calls again.

Set sensible guardrails: Prevent unnecessary use of expensive capabilities. For example, don’t allow users to paste in very long texts or upload very large images.

Monitor usage patterns: Watch for inefficient processes or unexpected costs. If you can’t monitor this on a per-interaction basis, at least try to understand global usage patterns.
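Several of these tips can live in one thin wrapper around your model call. The sketch below is a hypothetical `CostAwareAgent` in Python: `call_model` stands in for whatever LLM or tool call your agent makes, the flat `cost_per_call` and the 4,000-character limit are assumptions, and the cache only matches exact repeats after normalizing case and surrounding whitespace:

```python
import hashlib
from collections import defaultdict
from collections.abc import Callable

MAX_INPUT_CHARS = 4_000  # guardrail threshold (assumed; tune it to your use case)


class CostAwareAgent:
    """Hypothetical wrapper: response cache + input-size guardrail + spend tracking."""

    def __init__(self, call_model: Callable[[str], str], cost_per_call: float):
        self.call_model = call_model        # any function that sends a prompt and returns text
        self.cost_per_call = cost_per_call  # assumed flat USD cost per model call
        self.cache: dict[str, str] = {}
        self.stats: defaultdict[str, float] = defaultdict(float)

    def answer(self, question: str) -> str:
        # Guardrail: refuse oversized inputs before they reach a paid API.
        if len(question) > MAX_INPUT_CHARS:
            self.stats["rejected"] += 1
            raise ValueError(f"input exceeds {MAX_INPUT_CHARS} characters")
        # Cache key: normalize case and surrounding whitespace, then hash.
        key = hashlib.sha256(question.strip().lower().encode("utf-8")).hexdigest()
        if key in self.cache:
            self.stats["cache_hits"] += 1  # served without a paid call
            return self.cache[key]
        response = self.call_model(question)
        self.cache[key] = response
        # Per-interaction monitoring: count paid calls and accumulate spend.
        self.stats["model_calls"] += 1
        self.stats["spend"] += self.cost_per_call
        return response


# Usage with a stand-in model function (no real API is called).
agent = CostAwareAgent(call_model=lambda q: f"Answer to: {q}", cost_per_call=0.02)
for q in ["How do I reset my password?", "how do I reset my password? ", "Where is my invoice?"]:
    agent.answer(q)
print(dict(agent.stats))
```

Here the second question is served from the cache, so the stats show two paid model calls, one cache hit, and $0.04 of spend. An in-memory dict grows without bound; a production cache would need eviction and, for paraphrased questions, something smarter than exact matching.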

Remember This

The companies that succeed with AI won't necessarily be those with the most advanced capabilities, but those who understand the economic realities of deploying AI at scale. By tracking and optimizing your agentic margin, you're not just counting pennies; you're building a sustainable foundation for AI that actually delivers on its promise.

Ready to see it in action? Sign up for our exclusive beta.
